Security

- 1: Overview of Cloud Native Security

- 2: Pod Security Standards

- 3: Pod Security Admission

- 4: Pod Security Policies

- 5: Security For Windows Nodes

- 6: Controlling Access to the Kubernetes API

1 - Overview of Cloud Native Security

This overview defines a model for thinking about Kubernetes security in the context of Cloud Native security.

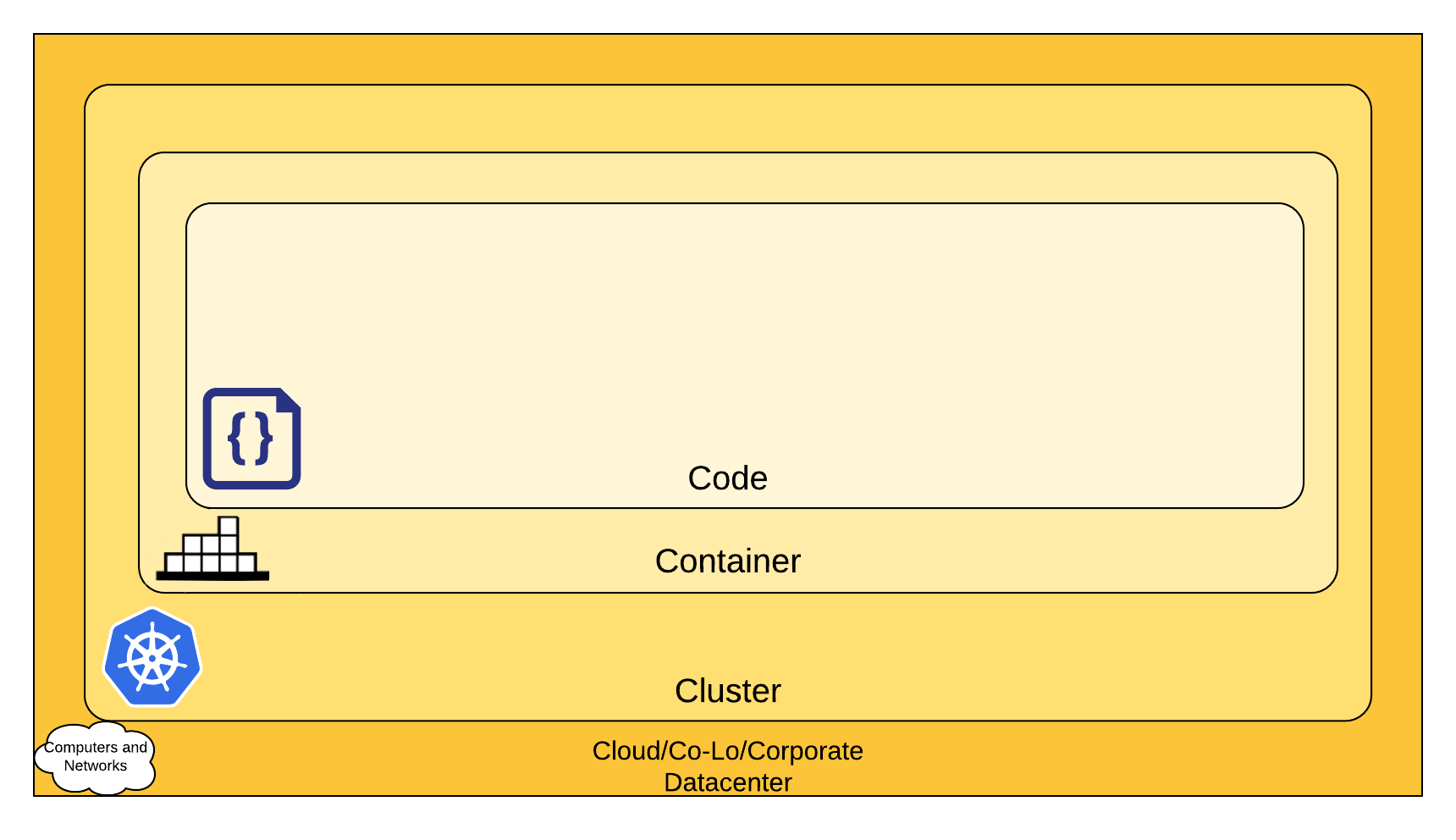

The 4C's of Cloud Native security

You can think about security in layers. The 4C's of Cloud Native security are Cloud, Clusters, Containers, and Code.

Each layer of the Cloud Native security model builds upon the next outermost layer. The Code layer benefits from strong base (Cloud, Cluster, Container) security layers. You cannot safeguard against poor security standards in the base layers by addressing security at the Code level.

Cloud

In many ways, the Cloud (or co-located servers, or the corporate datacenter) is the trusted computing base of a Kubernetes cluster. If the Cloud layer is vulnerable (or configured in a vulnerable way) then there is no guarantee that the components built on top of this base are secure. Each cloud provider makes security recommendations for running workloads securely in their environment.

Cloud provider security

If you are running a Kubernetes cluster on your own hardware or a different cloud provider, consult your documentation for security best practices. Here are links to some of the popular cloud providers' security documentation:

| IaaS Provider | Link |
|---|---|
| Alibaba Cloud | https://www.alibabacloud.com/trust-center |
| Amazon Web Services | https://aws.amazon.com/security/ |
| Google Cloud Platform | https://cloud.google.com/security/ |
| IBM Cloud | https://www.ibm.com/cloud/security |
| Microsoft Azure | https://docs.microsoft.com/en-us/azure/security/azure-security |
| Oracle Cloud Infrastructure | https://www.oracle.com/security/ |
| VMware vSphere | https://www.vmware.com/security/hardening-guides.html |

Infrastructure security

Suggestions for securing your infrastructure in a Kubernetes cluster:

| Area of Concern for Kubernetes Infrastructure | Recommendation |
|---|---|
| Network access to API Server (Control plane) | Access to the Kubernetes control plane should not be allowed publicly on the internet. It should be controlled by network access control lists restricted to the set of IP addresses needed to administer the cluster. |
| Network access to Nodes (nodes) | Nodes should be configured to only accept connections (via network access control lists) from the control plane on the specified ports, and accept connections for services in Kubernetes of type NodePort and LoadBalancer. If possible, these nodes should not be exposed on the public internet at all. |
| Kubernetes access to Cloud Provider API | Each cloud provider needs to grant a different set of permissions to the Kubernetes control plane and nodes. It is best to provide the cluster with cloud provider access that follows the principle of least privilege for the resources it needs to administer. The Kops documentation provides information about IAM policies and roles. |
| Access to etcd | Access to etcd (the datastore of Kubernetes) should be limited to the control plane only. Depending on your configuration, you should attempt to use etcd over TLS. More information can be found in the etcd documentation. |
| etcd Encryption | Wherever possible it's a good practice to encrypt all storage at rest. Because etcd holds the state of the entire cluster (including Secrets), its disk in particular should be encrypted at rest. |

Cluster

There are two areas of concern for securing Kubernetes:

- Securing the cluster components that are configurable

- Securing the applications which run in the cluster

Components of the Cluster

If you want to protect your cluster from accidental or malicious access and adopt good information practices, read and follow the advice about securing your cluster.

Components in the cluster (your application)

Depending on the attack surface of your application, you may want to focus on specific aspects of security. For example: If you are running a service (Service A) that is critical in a chain of other resources and a separate workload (Service B) which is vulnerable to a resource exhaustion attack, then the risk of compromising Service A is high if you do not limit the resources of Service B. The following table lists areas of security concerns and recommendations for securing workloads running in Kubernetes:

| Area of Concern for Workload Security | Recommendation |
|---|---|
| RBAC Authorization (Access to the Kubernetes API) | https://kubernetes.io/docs/reference/access-authn-authz/rbac/ |
| Authentication | https://kubernetes.io/docs/concepts/security/controlling-access/ |
| Application secrets management (and encrypting them in etcd at rest) | https://kubernetes.io/docs/concepts/configuration/secret/ https://kubernetes.io/docs/tasks/administer-cluster/encrypt-data/ |
| Ensuring that pods meet defined Pod Security Standards | https://kubernetes.io/docs/concepts/security/pod-security-standards/#policy-instantiation |
| Quality of Service (and Cluster resource management) | https://kubernetes.io/docs/tasks/configure-pod-container/quality-service-pod/ |
| Network Policies | https://kubernetes.io/docs/concepts/services-networking/network-policies/ |
| TLS for Kubernetes Ingress | https://kubernetes.io/docs/concepts/services-networking/ingress/#tls |
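To make the Service A / Service B scenario concrete, the following is a minimal sketch of a Pod that caps what Service B may consume, so that a resource exhaustion attack against it is less likely to starve Service A. The Pod name, image, and all numeric values are hypothetical placeholders, not recommendations; suitable values depend on the workload.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: service-b                           # hypothetical name
spec:
  containers:
  - name: service-b
    image: registry.example/service-b:1.0   # hypothetical image
    resources:
      requests:                             # what the scheduler reserves for the container
        cpu: "250m"
        memory: "128Mi"
      limits:                               # hard ceiling enforced at runtime
        cpu: "500m"
        memory: "256Mi"
```

Setting both requests and limits also determines the Quality of Service class that Kubernetes assigns to the Pod.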

Container

Container security is outside the scope of this guide. Here are general recommendations and links to explore this topic:

| Area of Concern for Containers | Recommendation |
|---|---|
| Container Vulnerability Scanning and OS Dependency Security | As part of an image build step, you should scan your containers for known vulnerabilities. |
| Image Signing and Enforcement | Sign container images to maintain a system of trust for the content of your containers. |
| Disallow privileged users | When constructing containers, consult your documentation for how to create users inside of the containers that have the least level of operating system privilege necessary in order to carry out the goal of the container. |
| Use container runtime with stronger isolation | Select container runtime classes that provide stronger isolation. |
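As an illustration of the last row, the sketch below defines a RuntimeClass and a Pod that selects it. It assumes the container runtime on your nodes has already been configured with a handler named `runsc`; that handler name, the RuntimeClass name, the Pod name, and the image are all hypothetical and depend on how your nodes are set up.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: sandboxed                    # hypothetical RuntimeClass name
handler: runsc                       # assumed handler; must match the node's runtime configuration
---
apiVersion: v1
kind: Pod
metadata:
  name: isolated-workload            # hypothetical name
spec:
  runtimeClassName: sandboxed        # run this Pod with the stronger-isolation runtime
  containers:
  - name: app
    image: registry.example/app:1.0  # hypothetical image
```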

Code

Application code is one of the primary attack surfaces over which you have the most control. While securing application code is outside of the Kubernetes security topic, here are recommendations to protect application code:

Code security

| Area of Concern for Code | Recommendation |
|---|---|
| Access over TLS only | If your code needs to communicate by TCP, perform a TLS handshake with the client ahead of time. With the exception of a few cases, encrypt everything in transit. Going one step further, it's a good idea to encrypt network traffic between services. This can be done through a process known as mutual TLS authentication, or mTLS, which performs a two-sided verification of communication between two certificate-holding services. |
| Limiting port ranges of communication | Wherever possible, expose only the ports on your service that are absolutely essential for communication or metric gathering. |
| 3rd Party Dependency Security | It is a good practice to regularly scan your application's third party libraries for known security vulnerabilities. Most programming language ecosystems have tools for performing this check automatically. |
| Static Code Analysis | Most languages provide a way for a snippet of code to be analyzed for any potentially unsafe coding practices. Whenever possible you should perform checks using automated tooling that can scan codebases for common security errors. Some of the tools can be found at: https://owasp.org/www-community/Source_Code_Analysis_Tools |
| Dynamic probing attacks | There are a few automated tools that you can run against your service to try some of the well-known service attacks. These include SQL injection, CSRF, and XSS. One of the most popular dynamic analysis tools is the OWASP Zed Attack Proxy tool. |

2 - Pod Security Standards

The Pod Security Standards define three different policies to broadly cover the security spectrum. These policies are cumulative and range from highly permissive to highly restrictive. This guide outlines the requirements of each policy.

| Profile | Description |
|---|---|
| Privileged | Unrestricted policy, providing the widest possible level of permissions. This policy allows for known privilege escalations. |
| Baseline | Minimally restrictive policy which prevents known privilege escalations. Allows the default (minimally specified) Pod configuration. |
| Restricted | Heavily restricted policy, following current Pod hardening best practices. |

Profile Details

Privileged

The Privileged policy is purposely open and entirely unrestricted. This type of policy is typically aimed at system- and infrastructure-level workloads managed by privileged, trusted users.

The Privileged policy is defined by an absence of restrictions. For allow-by-default enforcement mechanisms (such as Gatekeeper), the Privileged policy may be an absence of applied constraints rather than an instantiated profile. In contrast, for a deny-by-default mechanism (such as Pod Security Policy), the Privileged policy should enable all controls (disable all restrictions).

Baseline

The Baseline policy is aimed at ease of adoption for common containerized workloads while preventing known privilege escalations. This policy is targeted at application operators and developers of non-critical applications. The following listed controls should be enforced/disallowed:

Note: In this table, wildcards (*) indicate all elements in a list. For example, spec.containers[*].securityContext refers to the Security Context object for all defined containers. If any of the listed containers fails to meet the requirements, the entire pod will fail validation.

| Control | Policy |
|---|---|
| HostProcess | Windows pods offer the ability to run HostProcess containers, which enables privileged access to the Windows node. Privileged access to the host is disallowed in the baseline policy. HostProcess pods are an alpha feature as of Kubernetes v1.22. |
| Host Namespaces | Sharing the host namespaces must be disallowed. |
| Privileged Containers | Privileged Pods disable most security mechanisms and must be disallowed. |
| Capabilities | Adding capabilities beyond the allowed set must be disallowed. |
| HostPath Volumes | HostPath volumes must be forbidden. |
| Host Ports | HostPorts should be disallowed, or at minimum restricted to a known list. |
| AppArmor | On supported hosts, the runtime/default AppArmor profile is applied by default. The baseline policy should prevent overriding or disabling the default AppArmor profile, or restrict overrides to an allowed set of profiles. |
| SELinux | Setting the SELinux type is restricted, and setting a custom SELinux user or role option is forbidden. |
| /proc Mount Type | The default /proc masks are set up to reduce attack surface, and should be required. |
| Seccomp | Seccomp profile must not be explicitly set to Unconfined. |
| Sysctls | Sysctls can disable security mechanisms or affect all containers on a host, and should be disallowed except for an allowed "safe" subset. A sysctl is considered safe if it is namespaced in the container or the Pod, and it is isolated from other Pods or processes on the same Node. |

Restricted

The Restricted policy is aimed at enforcing current Pod hardening best practices, at the expense of some compatibility. It is targeted at operators and developers of security-critical applications, as well as lower-trust users. The following listed controls should be enforced/disallowed:

Note: In this table, wildcards (*) indicate all elements in a list. For example, spec.containers[*].securityContext refers to the Security Context object for all defined containers. If any of the listed containers fails to meet the requirements, the entire pod will fail validation.

| Control | Policy |
|---|---|
| Everything from the baseline profile. | |
| Volume Types | The restricted policy only permits a limited set of volume types. Every item in the spec.volumes[*] list must set one of the permitted volume-type fields to a non-null value. |
| Privilege Escalation (v1.8+) | Privilege escalation (such as via set-user-ID or set-group-ID file mode) should not be allowed. |
| Running as Non-root | Containers must be required to run as non-root users. The container-level fields may be undefined/nil if the pod-level spec.securityContext.runAsNonRoot is set to true. |
| Running as Non-root user (v1.23+) | Containers must not set runAsUser to 0. |
| Seccomp (v1.19+) | Seccomp profile must be explicitly set to one of the allowed values. Both the Unconfined profile and the absence of a profile are prohibited. The container-level fields may be undefined/nil if the pod-level spec.securityContext.seccompProfile.type field is set appropriately. Conversely, the pod-level field may be undefined/nil if all container-level fields are set. |
| Capabilities (v1.22+) | Containers must drop ALL capabilities, and are only permitted to add back the NET_BIND_SERVICE capability. |
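As a rough illustration of the Restricted controls, here is a minimal sketch of a Pod manifest intended to satisfy them: it runs as a non-root user, disallows privilege escalation, sets a seccomp profile explicitly, drops all capabilities, and uses no volumes. The Pod name, image, and user ID are hypothetical, and the exact checks depend on the policy version your cluster enforces.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restricted-example           # hypothetical name
spec:
  securityContext:
    runAsNonRoot: true               # Running as Non-root
    runAsUser: 1000                  # hypothetical non-zero UID; must not be 0
    seccompProfile:
      type: RuntimeDefault           # Seccomp must be set explicitly
  containers:
  - name: app
    image: registry.example/app:1.0  # hypothetical image
    securityContext:
      allowPrivilegeEscalation: false
      capabilities:
        drop: ["ALL"]                # drop every capability; none are added back
```

Because the non-root and seccomp settings are defined at the pod level, the corresponding container-level fields can be left unset.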

Policy Instantiation

Decoupling policy definition from policy instantiation allows for a common understanding and consistent language of policies across clusters, independent of the underlying enforcement mechanism.

As mechanisms mature, they will be defined below on a per-policy basis. The methods of enforcement of individual policies are not defined here.

Pod Security Admission Controller

PodSecurityPolicy (Deprecated)

FAQ

Why isn't there a profile between privileged and baseline?

The three profiles defined here have a clear linear progression from most secure (restricted) to least secure (privileged), and cover a broad set of workloads. Privileges required above the baseline policy are typically very application specific, so we do not offer a standard profile in this niche. This is not to say that the privileged profile should always be used in this case, but that policies in this space need to be defined on a case-by-case basis.

SIG Auth may reconsider this position in the future, should a clear need for other profiles arise.

What's the difference between a security profile and a security context?

Security Contexts configure Pods and Containers at runtime. Security contexts are defined as part of the Pod and container specifications in the Pod manifest, and represent parameters to the container runtime.

Security profiles are control plane mechanisms to enforce specific settings in the Security Context, as well as other related parameters outside the Security Context. As of July 2021, Pod Security Policies are deprecated in favor of the built-in Pod Security Admission Controller.

Other alternatives for enforcing security profiles are being developed in the Kubernetes ecosystem.

What profiles should I apply to my Windows Pods?

Windows in Kubernetes has some limitations and differentiators from standard Linux-based workloads. Specifically, many of the Pod SecurityContext fields have no effect on Windows. As such, no standardized Pod Security profiles currently exist.

If you apply the restricted profile for a Windows pod, this may have an impact on the pod at runtime. The restricted profile requires enforcing Linux-specific restrictions (such as seccomp profile, and disallowing privilege escalation). If the kubelet and / or its container runtime ignore these Linux-specific values, then the Windows pod should still work normally within the restricted profile. However, the lack of enforcement means that there is no additional restriction, for Pods that use Windows containers, compared to the baseline profile.

The use of the HostProcess flag to create a HostProcess pod should only be done in alignment with the privileged policy. Creation of a Windows HostProcess pod is blocked under the baseline and restricted policies, so any HostProcess pod should be considered privileged.

What about sandboxed Pods?

There is not currently an API standard that controls whether a Pod is considered sandboxed or not. Sandbox Pods may be identified by the use of a sandboxed runtime (such as gVisor or Kata Containers), but there is no standard definition of what a sandboxed runtime is.

The protections necessary for sandboxed workloads can differ from others. For example, the need to restrict privileged permissions is lessened when the workload is isolated from the underlying kernel. This allows for workloads requiring heightened permissions to still be isolated.

Additionally, the protection of sandboxed workloads is highly dependent on the method of sandboxing. As such, no single profile is recommended for all sandboxed workloads.

3 - Pod Security Admission

Kubernetes v1.23 [beta]

The Kubernetes Pod Security Standards define different isolation levels for Pods. These standards let you define how you want to restrict the behavior of pods in a clear, consistent fashion.

As a beta feature, Kubernetes offers a built-in Pod Security admission controller, the successor to PodSecurityPolicies. Pod security restrictions are applied at the namespace level when pods are created.

Before you begin

To use this mechanism, your cluster must enforce Pod Security admission.

Built-in Pod Security admission enforcement

In Kubernetes v1.24, the PodSecurity feature gate is a beta feature and is enabled by default. You must have this feature gate enabled.

If you are running a different version of Kubernetes, consult the documentation for that release.

Alternative: installing the PodSecurity admission webhook

The PodSecurity admission logic is also available as a validating admission webhook. This implementation is also beta.

For environments where the built-in PodSecurity admission plugin cannot be enabled, you can instead enable that logic via a validating admission webhook.

A pre-built container image, certificate generation scripts, and example manifests are available at https://git.k8s.io/pod-security-admission/webhook.

To install:

```shell
git clone https://github.com/kubernetes/pod-security-admission.git
cd pod-security-admission/webhook
make certs
kubectl apply -k .
```

Pod Security levels

Pod Security admission places requirements on a Pod's Security Context and other related fields according to the three levels defined by the Pod Security Standards: privileged, baseline, and restricted. Refer to the Pod Security Standards page for an in-depth look at those requirements.

Pod Security Admission labels for namespaces

Once the feature is enabled or the webhook is installed, you can configure namespaces to define the admission control mode you want to use for pod security in each namespace. Kubernetes defines a set of labels that you can set to define which of the predefined Pod Security Standard levels you want to use for a namespace. The label you select defines what action the control plane takes if a potential violation is detected:

| Mode | Description |
|---|---|
| enforce | Policy violations will cause the pod to be rejected. |
| audit | Policy violations will trigger the addition of an audit annotation to the event recorded in the audit log, but are otherwise allowed. |
| warn | Policy violations will trigger a user-facing warning, but are otherwise allowed. |

A namespace can configure any or all modes, or even set a different level for different modes.

For each mode, there are two labels that determine the policy used:

```yaml
# The per-mode level label indicates which policy level to apply for the mode.
#
# MODE must be one of `enforce`, `audit`, or `warn`.
# LEVEL must be one of `privileged`, `baseline`, or `restricted`.
pod-security.kubernetes.io/<MODE>: <LEVEL>

# Optional: per-mode version label that can be used to pin the policy to the
# version that shipped with a given Kubernetes minor version (for example v1.24).
#
# MODE must be one of `enforce`, `audit`, or `warn`.
# VERSION must be a valid Kubernetes minor version, or `latest`.
pod-security.kubernetes.io/<MODE>-version: <VERSION>
```

Check out Enforce Pod Security Standards with Namespace Labels to see example usage.
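As a concrete illustration of these labels, the following sketch shows a Namespace that enforces the baseline level while auditing and warning against the restricted level, with each mode pinned to v1.24. The namespace name is hypothetical, and the choice of levels is only an example.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-baseline-namespace   # hypothetical name
  labels:
    # Reject pods that violate the baseline level.
    pod-security.kubernetes.io/enforce: baseline
    pod-security.kubernetes.io/enforce-version: v1.24
    # Allow, but record and warn about, pods that violate the restricted level.
    pod-security.kubernetes.io/audit: restricted
    pod-security.kubernetes.io/audit-version: v1.24
    pod-security.kubernetes.io/warn: restricted
    pod-security.kubernetes.io/warn-version: v1.24
```

Using a stricter level for audit and warn than for enforce is one way to preview the effect of tightening a namespace before pods are actually rejected.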

Workload resources and Pod templates

Pods are often created indirectly, by creating a workload object such as a Deployment or Job. The workload object defines a Pod template and a controller for the workload resource creates Pods based on that template. To help catch violations early, both the audit and warning modes are applied to the workload resources. However, enforce mode is not applied to workload resources, only to the resulting pod objects.

Exemptions

You can define exemptions from pod security enforcement in order to allow the creation of pods that would have otherwise been prohibited due to the policy associated with a given namespace. Exemptions can be statically configured in the Admission Controller configuration.

Exemptions must be explicitly enumerated. Requests meeting exemption criteria are ignored by the Admission Controller (all enforce, audit and warn behaviors are skipped). Exemption dimensions include:

- Usernames: requests from users with an exempt authenticated (or impersonated) username are ignored.

- RuntimeClassNames: pods and workload resources specifying an exempt runtime class name are ignored.

- Namespaces: pods and workload resources in an exempt namespace are ignored.

Caution: Controller service accounts (such as system:serviceaccount:kube-system:replicaset-controller) should generally not be exempted, as doing so would implicitly exempt any user that can create the corresponding workload resource.
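The sketch below shows how static exemptions might be expressed in the admission controller configuration file passed to the API server. It assumes the `v1beta1` configuration API that accompanies the beta feature; other releases may use a different version. The default levels and the exempted namespace are illustrative only.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
- name: PodSecurity
  configuration:
    apiVersion: pod-security.admission.config.k8s.io/v1beta1  # assumed version for the beta feature
    kind: PodSecurityConfiguration
    # Levels applied when a namespace sets no mode label (illustrative values).
    defaults:
      enforce: "baseline"
      enforce-version: "latest"
      audit: "restricted"
      audit-version: "latest"
      warn: "restricted"
      warn-version: "latest"
    exemptions:
      usernames: []                 # authenticated usernames to exempt
      runtimeClasses: []            # runtime class names to exempt
      namespaces: ["kube-system"]   # illustrative: namespaces to exempt
```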

Updates to the following pod fields are exempt from policy checks, meaning that if a pod update request only changes these fields, it will not be denied even if the pod is in violation of the current policy level:

- Any metadata updates except changes to the seccomp or AppArmor annotations:
  - seccomp.security.alpha.kubernetes.io/pod (deprecated)
  - container.seccomp.security.alpha.kubernetes.io/* (deprecated)
  - container.apparmor.security.beta.kubernetes.io/*
- Valid updates to .spec.activeDeadlineSeconds
- Valid updates to .spec.tolerations

4 - Pod Security Policies

Kubernetes v1.21 [deprecated]

Pod Security Policies enable fine-grained authorization of pod creation and updates.

What is a Pod Security Policy?

A Pod Security Policy is a cluster-level resource that controls security sensitive aspects of the pod specification. The PodSecurityPolicy objects define a set of conditions that a pod must run with in order to be accepted into the system, as well as defaults for the related fields. They allow an administrator to control the following:

| Control Aspect | Field Names |
|---|---|
| Running of privileged containers | privileged |
| Usage of host namespaces | hostPID, hostIPC |
| Usage of host networking and ports | hostNetwork, hostPorts |
| Usage of volume types | volumes |
| Usage of the host filesystem | allowedHostPaths |
| Allow specific FlexVolume drivers | allowedFlexVolumes |
| Allocating an FSGroup that owns the pod's volumes | fsGroup |
| Requiring the use of a read only root file system | readOnlyRootFilesystem |
| The user and group IDs of the container | runAsUser, runAsGroup, supplementalGroups |
| Restricting escalation to root privileges | allowPrivilegeEscalation, defaultAllowPrivilegeEscalation |
| Linux capabilities | defaultAddCapabilities, requiredDropCapabilities, allowedCapabilities |
| The SELinux context of the container | seLinux |
| The Allowed Proc Mount types for the container | allowedProcMountTypes |
| The AppArmor profile used by containers | annotations |
| The seccomp profile used by containers | annotations |
| The sysctl profile used by containers | forbiddenSysctls, allowedUnsafeSysctls |

Enabling Pod Security Policies

Pod security policy control is implemented as an optional admission controller. PodSecurityPolicies are enforced by enabling the admission controller, but doing so without authorizing any policies will prevent any pods from being created in the cluster.

Since the pod security policy API (policy/v1beta1/podsecuritypolicy) is enabled independently of the admission controller, for existing clusters it is recommended that policies are added and authorized before enabling the admission controller.

Authorizing Policies

When a PodSecurityPolicy resource is created, it does nothing on its own. In order to use it, the requesting user or target pod's service account must be authorized to use the policy, by allowing the use verb on the policy.

Most Kubernetes pods are not created directly by users. Instead, they are typically created indirectly as part of a Deployment, ReplicaSet, or other templated controller via the controller manager. Granting the controller access to the policy would grant access for all pods created by that controller, so the preferred method for authorizing policies is to grant access to the pod's service account (see example).

Via RBAC

RBAC is a standard Kubernetes authorization mode, and can easily be used to authorize use of policies.

First, a Role or ClusterRole needs to grant access to use the desired policies. The rules to grant access look like this:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: <role name>
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  verbs:     ['use']
  resourceNames:
  - <list of policies to authorize>
```

Then the (Cluster)Role is bound to the authorized user(s):

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: <binding name>
roleRef:
  kind: ClusterRole
  name: <role name>
  apiGroup: rbac.authorization.k8s.io
subjects:
# Authorize all service accounts in a namespace (recommended):
- kind: Group
  apiGroup: rbac.authorization.k8s.io
  name: system:serviceaccounts:<authorized namespace>
# Authorize specific service accounts (not recommended):
- kind: ServiceAccount
  name: <authorized service account name>
  namespace: <authorized pod namespace>
# Authorize specific users (not recommended):
- kind: User
  apiGroup: rbac.authorization.k8s.io
  name: <authorized user name>
```

If a RoleBinding (not a ClusterRoleBinding) is used, it will only grant usage for pods being run in the same namespace as the binding. This can be paired with system groups to grant access to all pods run in the namespace:

```yaml
# Authorize all service accounts in a namespace:
- kind: Group
  apiGroup: rbac.authorization.k8s.io
  name: system:serviceaccounts
# Or equivalently, all authenticated users in a namespace:
- kind: Group
  apiGroup: rbac.authorization.k8s.io
  name: system:authenticated
```

For more examples of RBAC bindings, see RoleBinding examples. For a complete example of authorizing a PodSecurityPolicy, see below.

Recommended Practice

PodSecurityPolicy is being replaced by a new, simplified PodSecurity admission controller. For more details on this change, see PodSecurityPolicy Deprecation: Past, Present, and Future. Follow these guidelines to simplify migration from PodSecurityPolicy to the new admission controller:

- Limit your PodSecurityPolicies to the policies defined by the Pod Security Standards.

- Only bind PSPs to entire namespaces, by using the system:serviceaccounts:<namespace> group (where <namespace> is the target namespace). For example:

  ```yaml
  apiVersion: rbac.authorization.k8s.io/v1
  # This cluster role binding allows all pods in the "development" and
  # "canary" namespaces to use the baseline PSP.
  kind: ClusterRoleBinding
  metadata:
    name: psp-baseline-namespaces
  roleRef:
    kind: ClusterRole
    name: psp-baseline
    apiGroup: rbac.authorization.k8s.io
  subjects:
  - kind: Group
    name: system:serviceaccounts:development
    apiGroup: rbac.authorization.k8s.io
  - kind: Group
    name: system:serviceaccounts:canary
    apiGroup: rbac.authorization.k8s.io
  ```

Troubleshooting

- The controller manager must be run against the secured API port and must not have superuser permissions. See Controlling Access to the Kubernetes API to learn about API server access controls.

  If the controller manager connected through the trusted API port (also known as the localhost listener), requests would bypass authentication and authorization modules; all PodSecurityPolicy objects would be allowed, and users would be able to grant themselves the ability to create privileged containers.

  For more details on configuring controller manager authorization, see Controller Roles.

Policy Order

In addition to restricting pod creation and update, pod security policies can also be used to provide default values for many of the fields that it controls. When multiple policies are available, the pod security policy controller selects policies according to the following criteria:

- PodSecurityPolicies which allow the pod as-is, without changing defaults or mutating the pod, are preferred. The order of these non-mutating PodSecurityPolicies doesn't matter.

- If the pod must be defaulted or mutated, the first PodSecurityPolicy (ordered by name) to allow the pod is selected.

Example

This example assumes you have a running cluster with the PodSecurityPolicy admission controller enabled and you have cluster admin privileges.

Set up

Set up a namespace and a service account to act as for this example. We'll use this service account to mock a non-admin user.

```shell
kubectl create namespace psp-example
kubectl create serviceaccount -n psp-example fake-user
kubectl create rolebinding -n psp-example fake-editor --clusterrole=edit --serviceaccount=psp-example:fake-user
```

To make it clear which user we're acting as and save some typing, create two aliases:

```shell
alias kubectl-admin='kubectl -n psp-example'
alias kubectl-user='kubectl --as=system:serviceaccount:psp-example:fake-user -n psp-example'
```

Create a policy and a pod

Define the example PodSecurityPolicy object in a file. This is a policy that prevents the creation of privileged pods. The name of a PodSecurityPolicy object must be a valid DNS subdomain name.

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: example
spec:
  privileged: false  # Don't allow privileged pods!
  # The rest fills in some required fields.
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
  - '*'
```

And create it with kubectl:

```shell
kubectl-admin create -f example-psp.yaml
```

Now, as the unprivileged user, try to create a simple pod:

```shell
kubectl-user create -f- <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: pause
spec:
  containers:
    - name: pause
      image: k8s.gcr.io/pause
EOF
```

The output is similar to this:

```
Error from server (Forbidden): error when creating "STDIN": pods "pause" is forbidden: unable to validate against any pod security policy: []
```

What happened? Although the PodSecurityPolicy was created, neither the pod's service account nor fake-user have permission to use the new policy:

```shell
kubectl-user auth can-i use podsecuritypolicy/example
no
```

Create the rolebinding to grant fake-user the use verb on the example policy:

```shell
kubectl-admin create role psp:unprivileged \
    --verb=use \
    --resource=podsecuritypolicy \
    --resource-name=example
role "psp:unprivileged" created

kubectl-admin create rolebinding fake-user:psp:unprivileged \
    --role=psp:unprivileged \
    --serviceaccount=psp-example:fake-user
rolebinding "fake-user:psp:unprivileged" created

kubectl-user auth can-i use podsecuritypolicy/example
yes
```

Now retry creating the pod:

kubectl-user create -f- <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: pause
spec:
  containers:
    - name: pause
      image: k8s.gcr.io/pause
EOF

The output is similar to this:

pod "pause" created

It works as expected! But any attempts to create a privileged pod should still be denied:

kubectl-user create -f- <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: privileged
spec:
  containers:
    - name: pause
      image: k8s.gcr.io/pause
      securityContext:
        privileged: true
EOF

The output is similar to this:

Error from server (Forbidden): error when creating "STDIN": pods "privileged" is forbidden: unable to validate against any pod security policy: [spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]

Delete the pod before moving on:

kubectl-user delete pod pause

Run another pod

Let's try that again, slightly differently:

kubectl-user create deployment pause --image=k8s.gcr.io/pause

deployment "pause" created

kubectl-user get pods

No resources found.

kubectl-user get events | head -n 2

LASTSEEN FIRSTSEEN COUNT NAME KIND SUBOBJECT TYPE REASON SOURCE MESSAGE

1m 2m 15 pause-7774d79b5 ReplicaSet Warning FailedCreate replicaset-controller Error creating: pods "pause-7774d79b5-" is forbidden: no providers available to validate pod request

What happened? We already bound the psp:unprivileged role to fake-user, so why does the error Error creating: pods "pause-7774d79b5-" is forbidden: no providers available to validate pod request appear? The answer lies in the source: replicaset-controller. fake-user successfully created the deployment (which successfully created a replicaset), but when the replicaset controller went to create the pod, it was not authorized to use the example podsecuritypolicy.
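The rule at work here can be sketched in Python. This is a simplified model, not the admission controller's actual code: `bindings` is a hypothetical mapping from a subject to the policy names it may use, the `usable_policies` helper is made up for illustration, and the controller identity string is an assumption about how the ReplicaSet controller authenticates. A policy is considered for a pod when either the identity creating the pod or the pod's service account has been granted the use verb on it.

```python
# Simplified model of which PodSecurityPolicies are considered for a pod.
# The data structure, helper name, and controller identity are assumptions.

def usable_policies(bindings, creator, pod_service_account):
    """Return the policies that the pod's creator or its service account may use."""
    allowed = set(bindings.get(creator, ()))
    allowed |= set(bindings.get(pod_service_account, ()))
    return sorted(allowed)


FAKE_USER = "system:serviceaccount:psp-example:fake-user"
DEFAULT_SA = "system:serviceaccount:psp-example:default"
RS_CONTROLLER = "system:serviceaccount:kube-system:replicaset-controller"

# State after binding psp:unprivileged to fake-user only.
bindings = {FAKE_USER: ["example"]}

# fake-user creates the pod directly: the example policy can be used.
direct = usable_policies(bindings, FAKE_USER, DEFAULT_SA)

# The ReplicaSet controller creates the pod: no policy is available.
via_deployment = usable_policies(bindings, RS_CONTROLLER, DEFAULT_SA)

# After the role is also bound to the pod's (default) service account.
bindings[DEFAULT_SA] = ["example"]
fixed = usable_policies(bindings, RS_CONTROLLER, DEFAULT_SA)
```

In this model, binding the role to the pod's service account makes the policy usable no matter which controller ends up creating the pod.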

To fix this, bind the psp:unprivileged role to the pod's service account instead. In this case (since we didn't specify it) the service account is default:

kubectl-admin create rolebinding default:psp:unprivileged \
    --role=psp:unprivileged \
    --serviceaccount=psp-example:default

rolebinding "default:psp:unprivileged" created

Now if you give it a minute to retry, the replicaset-controller should eventually succeed in creating the pod:

kubectl-user get pods --watch

NAME READY STATUS RESTARTS AGE

pause-7774d79b5-qrgcb 0/1 Pending 0 1s

pause-7774d79b5-qrgcb 0/1 Pending 0 1s

pause-7774d79b5-qrgcb 0/1 ContainerCreating 0 1s

pause-7774d79b5-qrgcb 1/1 Running 0 2s

Clean up

Delete the namespace to clean up most of the example resources:

kubectl-admin delete ns psp-example

namespace "psp-example" deleted

Note that PodSecurityPolicy resources are not namespaced, and must be cleaned

up separately:

kubectl-admin delete psp example

podsecuritypolicy "example" deleted

Example Policies

This is the least restrictive policy you can create, equivalent to not using the pod security policy admission controller:

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: privileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
spec:
  privileged: true
  allowPrivilegeEscalation: true
  allowedCapabilities:
    - '*'
  volumes:
    - '*'
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  hostIPC: true
  hostPID: true
  runAsUser:
    rule: 'RunAsAny'
  seLinux:
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'RunAsAny'
  fsGroup:
    rule: 'RunAsAny'

This is an example of a restrictive policy that requires users to run as an unprivileged user, blocks possible escalations to root, and requires use of several security mechanisms.

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: restricted
  annotations:
    # docker/default identifies a profile for seccomp, but it is not particularly tied to the Docker runtime
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default,runtime/default'
    apparmor.security.beta.kubernetes.io/allowedProfileNames: 'runtime/default'
    apparmor.security.beta.kubernetes.io/defaultProfileName: 'runtime/default'
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  requiredDropCapabilities:
    - ALL
  # Allow core volume types.
  volumes:
    - 'configMap'
    - 'emptyDir'
    - 'projected'
    - 'secret'
    - 'downwardAPI'
    # Assume that ephemeral CSI drivers & persistentVolumes set up by the cluster admin are safe to use.
    - 'csi'
    - 'persistentVolumeClaim'
    - 'ephemeral'
  hostNetwork: false
  hostIPC: false
  hostPID: false
  runAsUser:
    # Require the container to run without root privileges.
    rule: 'MustRunAsNonRoot'
  seLinux:
    # This policy assumes the nodes are using AppArmor rather than SELinux.
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'MustRunAs'
    ranges:
      # Forbid adding the root group.
      - min: 1
        max: 65535
  fsGroup:
    rule: 'MustRunAs'
    ranges:
      # Forbid adding the root group.
      - min: 1
        max: 65535
  readOnlyRootFilesystem: false

See Pod Security Standards for more examples.

Policy Reference

Privileged

Privileged - Determines whether any container in a pod can enable privileged mode. By default a container is not allowed to access any devices on the host, but a "privileged" container is given access to all devices on the host. This gives the container nearly the same access as processes running on the host. This is useful for containers that need Linux capabilities such as manipulating the network stack and accessing devices.

Host namespaces

HostPID - Controls whether the pod containers can share the host process ID namespace. Note that when paired with ptrace this can be used to escalate privileges outside of the container (ptrace is forbidden by default).

HostIPC - Controls whether the pod containers can share the host IPC namespace.

HostNetwork - Controls whether the pod may use the node network namespace. Doing so gives the pod access to the loopback device, services listening on localhost, and could be used to snoop on network activity of other pods on the same node.

HostPorts - Provides a list of ranges of allowable ports in the host network namespace. Defined as a list of HostPortRange, with min (inclusive) and max (inclusive). Defaults to no allowed host ports.

Volumes and file systems

Volumes - Provides a list of allowed volume types. The allowable values correspond to the volume sources that are defined when creating a volume. For the complete list of volume types, see Types of Volumes. Additionally, * may be used to allow all volume types.

The recommended minimum set of allowed volumes for new PSPs is:

- configMap

- downwardAPI

- emptyDir

- persistentVolumeClaim

- secret

- projected

Warning: PodSecurityPolicy does not limit the types of PersistentVolume objects that may be referenced by a PersistentVolumeClaim, and hostPath type PersistentVolumes do not support read-only access mode. Only trusted users should be granted permission to create PersistentVolume objects.

FSGroup - Controls the supplemental group applied to some volumes.

- MustRunAs - Requires at least one range to be specified. Uses the minimum value of the first range as the default. Validates against all ranges.

- MayRunAs - Requires at least one range to be specified. Allows FSGroups to be left unset without providing a default. Validates against all ranges if FSGroups is set.

- RunAsAny - No default provided. Allows any fsGroup ID to be specified.
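The three FSGroup rules can be sketched as a small Python function. This is an illustrative model rather than the admission controller's code: the `resolve_group` name and the `(min, max)` tuple representation are assumptions, and ranges are treated as inclusive at both ends.

```python
from typing import List, Optional, Tuple

Range = Tuple[int, int]  # (min, max), assumed inclusive at both ends


def resolve_group(rule: str, ranges: List[Range], requested: Optional[int]) -> Optional[int]:
    """Return the effective group ID, or raise ValueError if the request is rejected."""
    if rule == "RunAsAny":
        return requested  # no default is provided and any value is accepted
    if rule not in ("MustRunAs", "MayRunAs"):
        raise ValueError(f"unknown rule: {rule}")
    if not ranges:
        raise ValueError(f"{rule} requires at least one range")
    if requested is None:
        # MustRunAs defaults to the minimum of the first range; MayRunAs leaves it unset.
        return ranges[0][0] if rule == "MustRunAs" else None
    if any(lo <= requested <= hi for lo, hi in ranges):
        return requested
    raise ValueError(f"group {requested} is not in an allowed range")
```

With the range 1 to 65535 used in the restricted example policy, an unset value becomes 1 under MustRunAs and stays unset under MayRunAs, while an explicit request for group 0 is rejected under both.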

AllowedHostPaths - This specifies a list of host paths that are allowed

to be used by hostPath volumes. An empty list means there is no restriction on

host paths used. This is defined as a list of objects with a single pathPrefix

field, which allows hostPath volumes to mount a path that begins with an

allowed prefix, and a readOnly field indicating it must be mounted read-only.

For example:

allowedHostPaths:
  # This allows "/foo", "/foo/", "/foo/bar" etc., but
  # disallows "/fool", "/etc/foo" etc.
  # "/foo/../" is never valid.
  - pathPrefix: "/foo"
    readOnly: true # only allow read-only mounts
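The matching behavior described in the comments above can be sketched in Python. This is a minimal model under stated assumptions, not the actual Kubernetes implementation: the function names are hypothetical, paths are assumed to be absolute, and matching is done on whole path components so that "/fool" does not match the prefix "/foo".

```python
def host_path_allowed(path_prefix: str, path: str) -> bool:
    """Check a hostPath against one allowed pathPrefix, component by component."""
    parts = [p for p in path.split("/") if p]
    if ".." in parts:
        return False  # paths containing ".." are never valid
    prefix_parts = [p for p in path_prefix.split("/") if p]
    return parts[: len(prefix_parts)] == prefix_parts


def mount_allowed(rule: dict, path: str, read_only: bool) -> bool:
    """Apply both the pathPrefix and the readOnly requirement of one rule."""
    if not host_path_allowed(rule["pathPrefix"], path):
        return False
    # A rule marked readOnly only admits read-only mounts.
    return read_only or not rule.get("readOnly", False)


rule = {"pathPrefix": "/foo", "readOnly": True}
```

Comparing components rather than raw string prefixes is what keeps sibling directories such as "/fool" outside the allowed tree.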

There are many ways a container with unrestricted access to the host filesystem can escalate privileges, including reading data from other containers, and abusing the credentials of system services, such as Kubelet.

Writeable hostPath directory volumes allow containers to write

to the filesystem in ways that let them traverse the host filesystem outside the pathPrefix.

readOnly: true, available in Kubernetes 1.11+, must be used on all allowedHostPaths

to effectively limit access to the specified pathPrefix.

ReadOnlyRootFilesystem - Requires that containers must run with a read-only root filesystem (i.e. no writable layer).

FlexVolume drivers

This specifies a list of FlexVolume drivers that are allowed to be used by flexvolume. An empty list or nil means there is no restriction on the drivers. Make sure the volumes field contains the flexVolume volume type; otherwise no FlexVolume driver is allowed.

For example:

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: allow-flex-volumes
spec:
  # ... other spec fields
  volumes:
    - flexVolume
  allowedFlexVolumes:
    - driver: example/lvm
    - driver: example/cifs

Users and groups

RunAsUser - Controls which user ID the containers are run with.

- MustRunAs - Requires at least one range to be specified. Uses the minimum value of the first range as the default. Validates against all ranges.

- MustRunAsNonRoot - Requires that the pod be submitted with a non-zero runAsUser or have the USER directive defined (using a numeric UID) in the image. Pods which have specified neither runAsNonRoot nor runAsUser settings will be mutated to set runAsNonRoot=true, thus requiring a defined non-zero numeric USER directive in the container. No default provided. Setting allowPrivilegeEscalation=false is strongly recommended with this strategy.

- RunAsAny - No default provided. Allows any runAsUser to be specified.

RunAsGroup - Controls which primary group ID the containers are run with.

- MustRunAs - Requires at least one range to be specified. Uses the minimum value of the first range as the default. Validates against all ranges.

- MayRunAs - Does not require that RunAsGroup be specified. However, when RunAsGroup is specified, it has to fall within the defined range.

- RunAsAny - No default provided. Allows any runAsGroup to be specified.

SupplementalGroups - Controls which group IDs containers add.

- MustRunAs - Requires at least one range to be specified. Uses the minimum value of the first range as the default. Validates against all ranges.

- MayRunAs - Requires at least one range to be specified. Allows supplementalGroups to be left unset without providing a default. Validates against all ranges if supplementalGroups is set.

- RunAsAny - No default provided. Allows any supplementalGroups to be specified.

Privilege Escalation

These options control the allowPrivilegeEscalation container option. This bool directly controls whether the no_new_privs flag gets set on the container process. This flag will prevent setuid binaries from changing the effective user ID, and prevent files from enabling extra capabilities (e.g. it will prevent the use of the ping tool). This behavior is required to effectively enforce MustRunAsNonRoot.

AllowPrivilegeEscalation - Gates whether or not a user is allowed to set the

security context of a container to allowPrivilegeEscalation=true. This

defaults to allowed so as to not break setuid binaries. Setting it to false

ensures that no child process of a container can gain more privileges than its parent.

DefaultAllowPrivilegeEscalation - Sets the default for the

allowPrivilegeEscalation option. The default behavior without this is to allow

privilege escalation so as to not break setuid binaries. If that behavior is not

desired, this field can be used to default to disallow, while still permitting

pods to request allowPrivilegeEscalation explicitly.

Capabilities

Linux capabilities provide a finer grained breakdown of the privileges traditionally associated with the superuser. Some of these capabilities can be used to escalate privileges or for container breakout, and may be restricted by the PodSecurityPolicy. For more details on Linux capabilities, see capabilities(7).

The following fields take a list of capabilities, specified as the capability

name in ALL_CAPS without the CAP_ prefix.

AllowedCapabilities - Provides a list of capabilities that are allowed to be added

to a container. The default set of capabilities are implicitly allowed. The

empty set means that no additional capabilities may be added beyond the default

set. * can be used to allow all capabilities.

RequiredDropCapabilities - The capabilities which must be dropped from

containers. These capabilities are removed from the default set, and must not be

added. Capabilities listed in RequiredDropCapabilities must not be included in

AllowedCapabilities or DefaultAddCapabilities.

DefaultAddCapabilities - The capabilities which are added to containers by default, in addition to the runtime defaults. See the documentation for your container runtime for information on working with Linux capabilities.
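Two of the constraints stated above lend themselves to a quick consistency check on a policy before it is applied. The following Python sketch is illustrative only: both function names are hypothetical, and the special values * and ALL are compared literally rather than expanded.

```python
def invalid_names(names):
    """Return names that are not ALL_CAPS or that still carry the CAP_ prefix."""
    return [
        n for n in names
        if n != "*" and (n != n.upper() or n.startswith("CAP_"))
    ]


def capability_conflicts(allowed, required_drop, default_add):
    """Return capabilities listed in requiredDropCapabilities that also appear
    in allowedCapabilities or defaultAddCapabilities."""
    dropped = set(required_drop)
    return sorted(dropped & (set(allowed) | set(default_add)))


bad_names = invalid_names(["NET_ADMIN", "CAP_SYS_TIME", "chown", "*"])
conflicts = capability_conflicts(
    allowed=["NET_ADMIN", "SYS_TIME"],
    required_drop=["SYS_TIME", "KILL"],
    default_add=["KILL"],
)
```

An empty result from both helpers means the three lists are at least mutually consistent; it does not say whether the chosen capabilities are safe.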

SELinux

- MustRunAs - Requires seLinuxOptions to be configured. Uses seLinuxOptions as the default. Validates against seLinuxOptions.

- RunAsAny - No default provided. Allows any seLinuxOptions to be specified.

AllowedProcMountTypes

allowedProcMountTypes is a list of allowed ProcMountTypes.

Empty or nil indicates that only the DefaultProcMountType may be used.

DefaultProcMount uses the container runtime defaults for readonly and masked

paths for /proc. Most container runtimes mask certain paths in /proc to avoid

accidental security exposure of special devices or information. This is denoted

as the string Default.

The only other ProcMountType is UnmaskedProcMount, which bypasses the default masking behavior of the container runtime and ensures that the newly created /proc for the container stays intact with no modifications. This is denoted as the string Unmasked.

AppArmor

Controlled via annotations on the PodSecurityPolicy. Refer to the AppArmor documentation.

Seccomp

As of Kubernetes v1.19, you can use the seccompProfile field in the

securityContext of Pods or containers to

control use of seccomp profiles.

In prior versions, seccomp was controlled by adding annotations to a Pod. The

same PodSecurityPolicies can be used with either version to enforce how these

fields or annotations are applied.

seccomp.security.alpha.kubernetes.io/defaultProfileName - Annotation that specifies the default seccomp profile to apply to containers. Possible values are:

- unconfined - Seccomp is not applied to the container processes (this is the default in Kubernetes), if no alternative is provided.

- runtime/default - The default container runtime profile is used.

- docker/default - The Docker default seccomp profile is used. Deprecated as of Kubernetes 1.11. Use runtime/default instead.

- localhost/<path> - Specify a profile as a file on the node located at <seccomp_root>/<path>, where <seccomp_root> is defined via the --seccomp-profile-root flag on the Kubelet. If the --seccomp-profile-root flag is not defined, the default path will be used, which is <root-dir>/seccomp where <root-dir> is specified by the --root-dir flag.

Note: The --seccomp-profile-root flag is deprecated since Kubernetes v1.19. Users are encouraged to use the default path.

seccomp.security.alpha.kubernetes.io/allowedProfileNames - Annotation that

specifies which values are allowed for the pod seccomp annotations. Specified as

a comma-delimited list of allowed values. Possible values are those listed

above, plus * to allow all profiles. Absence of this annotation means that the

default cannot be changed.
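The comma-delimited format of the allowedProfileNames annotation can be illustrated with a short Python sketch. The `profile_in_allowed_list` helper is hypothetical, stripping whitespace around names is an assumption, and the case where the annotation is absent is deliberately not modeled.

```python
def profile_in_allowed_list(annotation_value: str, profile: str) -> bool:
    """Check a seccomp profile name against an allowedProfileNames value."""
    names = [n.strip() for n in annotation_value.split(",") if n.strip()]
    # A lone "*" entry allows every profile.
    return "*" in names or profile in names


# Value taken from the restricted example policy.
restricted = "docker/default,runtime/default"
```

With the restricted example value, runtime/default is accepted and unconfined is not; replacing the value with * accepts both.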

Sysctl

By default, all safe sysctls are allowed.

- forbiddenSysctls - excludes specific sysctls. You can forbid a combination of safe and unsafe sysctls in the list. To forbid setting any sysctls, use * on its own.

- allowedUnsafeSysctls - allows specific sysctls that had been disallowed by the default list, so long as these are not listed in forbiddenSysctls.

Refer to the Sysctl documentation.
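How the two fields combine can be sketched as follows. This is a simplified Python model, not the real validation code: the `sysctl_permitted` helper is hypothetical, the `SAFE` set is only an example of what a safe list might contain, and only exact names plus a lone * are handled.

```python
# Example safe list for illustration; consult the Sysctl documentation for the real one.
SAFE = {
    "kernel.shm_rmid_forced",
    "net.ipv4.ip_local_port_range",
    "net.ipv4.tcp_syncookies",
}


def sysctl_permitted(name, safe=SAFE, forbidden=(), allowed_unsafe=()):
    """Return True if a pod may set the named sysctl under this policy."""
    # forbiddenSysctls takes precedence over everything else.
    if "*" in forbidden or name in forbidden:
        return False
    # Safe sysctls are allowed by default; unsafe ones must be listed explicitly.
    return name in safe or name in allowed_unsafe
```

Checking the forbidden list first reflects the condition that an entry in allowedUnsafeSysctls only takes effect when the same sysctl is not listed in forbiddenSysctls.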

What's next

- See PodSecurityPolicy Deprecation: Past, Present, and Future to learn about the future of pod security policy.

- See Pod Security Standards for policy recommendations.

- Refer to PodSecurityPolicy reference for the API details.

5 - Security For Windows Nodes

This page describes security considerations and best practices specific to the Windows operating system.

Protection for Secret data on nodes

On Windows, data from Secrets are written out in clear text onto the node's local storage (as compared to using tmpfs / in-memory filesystems on Linux). As a cluster operator, you should take both of the following additional measures:

- Use file ACLs to secure the Secrets' file location.

- Apply volume-level encryption using BitLocker.

Container users

RunAsUsername can be specified for Windows Pods or containers to execute the container processes as a specific user. This is roughly equivalent to RunAsUser.

Windows containers offer two default user accounts, ContainerUser and ContainerAdministrator. The differences between these two user accounts are covered in When to use ContainerAdmin and ContainerUser user accounts within Microsoft's Secure Windows containers documentation.

Local users can be added to container images during the container build process.

- Nano Server based images run as ContainerUser by default

- Server Core based images run as ContainerAdministrator by default

Windows containers can also run as Active Directory identities by utilizing Group Managed Service Accounts.

Pod-level security isolation

Linux-specific pod security context mechanisms (such as SELinux, AppArmor, Seccomp, or custom POSIX capabilities) are not supported on Windows nodes.

Privileged containers are not supported on Windows. Instead HostProcess containers can be used on Windows to perform many of the tasks performed by privileged containers on Linux.

6 - Controlling Access to the Kubernetes API

This page provides an overview of controlling access to the Kubernetes API.

Users access the Kubernetes API using kubectl,

client libraries, or by making REST requests. Both human users and

Kubernetes service accounts can be

authorized for API access.

When a request reaches the API, it goes through several stages, illustrated in the

following diagram:

Transport security

In a typical Kubernetes cluster, the API serves on port 443, protected by TLS. The API server presents a certificate. This certificate may be signed using a private certificate authority (CA), or based on a public key infrastructure linked to a generally recognized CA.

If your cluster uses a private certificate authority, you need a copy of that CA

certificate configured into your ~/.kube/config on the client, so that you can

trust the connection and be confident it was not intercepted.

Your client can present a TLS client certificate at this stage.

Authentication

Once TLS is established, the HTTP request moves to the Authentication step. This is shown as step 1 in the diagram. The cluster creation script or cluster admin configures the API server to run one or more Authenticator modules. Authenticators are described in more detail in Authentication.

The input to the authentication step is the entire HTTP request; however, it typically examines the headers and/or client certificate.

Authentication modules include client certificates, passwords, plain tokens, bootstrap tokens, and JSON Web Tokens (used for service accounts).

Multiple authentication modules can be specified, in which case each one is tried in sequence, until one of them succeeds.

If the request cannot be authenticated, it is rejected with HTTP status code 401.

Otherwise, the user is authenticated as a specific username, and the user name

is available to subsequent steps to use in their decisions. Some authenticators

also provide the group memberships of the user, while other authenticators

do not.
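The "try each module in sequence" behavior can be sketched in Python. This is a toy model, not the API server's implementation: the two authenticators, the token table, and the `X-Example-Cert-CN` header are all made up for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

Identity = Tuple[str, List[str]]  # (username, groups)
Authenticator = Callable[[Dict[str, str]], Optional[Identity]]


def authenticate(headers: Dict[str, str], authenticators: List[Authenticator]) -> Optional[Identity]:
    """Try each authenticator in order and return the first identity found."""
    for auth in authenticators:
        identity = auth(headers)
        if identity is not None:
            return identity
    return None  # the API server would reject the request with HTTP 401


def static_token(headers: Dict[str, str]) -> Optional[Identity]:
    # Hypothetical token table; this module also supplies group memberships.
    tokens = {"Bearer s3cr3t": ("bob", ["developers"])}
    return tokens.get(headers.get("Authorization", ""))


def client_cert(headers: Dict[str, str]) -> Optional[Identity]:
    # Stand-in for certificate authentication: reads a made-up header
    # instead of a real TLS client certificate, and provides no groups.
    common_name = headers.get("X-Example-Cert-CN")
    return (common_name, []) if common_name else None


modules = [client_cert, static_token]
```

The returned username and groups are what later stages receive; nothing about the user is stored.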

While Kubernetes uses usernames for access control decisions and in request logging,

it does not have a User object nor does it store usernames or other information about

users in its API.

Authorization

After the request is authenticated as coming from a specific user, the request must be authorized. This is shown as step 2 in the diagram.

A request must include the username of the requester, the requested action, and the object affected by the action. The request is authorized if an existing policy declares that the user has permissions to complete the requested action.

For example, if Bob has the policy below, then he can read pods only in the namespace projectCaribou:

{
    "apiVersion": "abac.authorization.kubernetes.io/v1beta1",
    "kind": "Policy",
    "spec": {
        "user": "bob",
        "namespace": "projectCaribou",
        "resource": "pods",
        "readonly": true
    }
}

If Bob makes the following request, the request is authorized because he is allowed to read objects in the projectCaribou namespace:

{
    "apiVersion": "authorization.k8s.io/v1beta1",
    "kind": "SubjectAccessReview",
    "spec": {
        "resourceAttributes": {
            "namespace": "projectCaribou",
            "verb": "get",
            "group": "unicorn.example.org",
            "resource": "pods"
        }
    }
}

If Bob makes a request to write (create or update) to the objects in the projectCaribou namespace, his authorization is denied. If Bob makes a request to read (get) objects in a different namespace such as projectFish, then his authorization is denied.
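Bob's three outcomes can be reproduced with a small Python model of the policy above. This is a simplification for illustration: the `abac_allows` function is hypothetical, API groups and wildcards are ignored, and treating get, list, and watch as the read-only verbs is an assumption.

```python
# The "spec" portion of Bob's policy from the example above.
POLICY = {
    "user": "bob",
    "namespace": "projectCaribou",
    "resource": "pods",
    "readonly": True,
}

READ_VERBS = {"get", "list", "watch"}  # assumed set of read-only verbs


def abac_allows(policy, user, namespace, resource, verb):
    """Return True if this single policy authorizes the request."""
    if policy["user"] != user:
        return False
    if policy["namespace"] != namespace:
        return False
    if policy["resource"] != resource:
        return False
    # A read-only policy never authorizes a write.
    if policy.get("readonly", False) and verb not in READ_VERBS:
        return False
    return True


read_ok = abac_allows(POLICY, "bob", "projectCaribou", "pods", "get")
write_denied = abac_allows(POLICY, "bob", "projectCaribou", "pods", "create")
other_ns_denied = abac_allows(POLICY, "bob", "projectFish", "pods", "get")
```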

Kubernetes authorization requires that you use common REST attributes to interact with existing organization-wide or cloud-provider-wide access control systems. It is important to use REST formatting because these control systems might interact with other APIs besides the Kubernetes API.

Kubernetes supports multiple authorization modules, such as ABAC mode, RBAC Mode, and Webhook mode. When an administrator creates a cluster, they configure the authorization modules that should be used in the API server. If more than one authorization module is configured, Kubernetes checks each module, and if any module authorizes the request, then the request can proceed. If all of the modules deny the request, then the request is denied (HTTP status code 403).
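The "any module may authorize" rule can be sketched in Python. This is an illustrative model only: the `authorize` function and the two stand-in modules are hypothetical and do not correspond to real ABAC or RBAC logic.

```python
def authorize(request, modules):
    """Return True as soon as any module authorizes the request."""
    for module in modules:
        if module(request):
            return True
    return False  # every module denied: the API server would answer HTTP 403


def allow_bob_reads(request):
    # Stand-in module: lets bob perform get requests.
    return request["user"] == "bob" and request["verb"] == "get"


def allow_admins(request):
    # Stand-in module: lets members of a made-up "admins" group do anything.
    return "admins" in request.get("groups", [])


modules = [allow_bob_reads, allow_admins]
```

This is the opposite of admission control, where a single rejection is final.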

To learn more about Kubernetes authorization, including details about creating policies using the supported authorization modules, see Authorization.

Admission control

Admission Control modules are software modules that can modify or reject requests. In addition to the attributes available to Authorization modules, Admission Control modules can access the contents of the object that is being created or modified.

Admission controllers act on requests that create, modify, delete, or connect to (proxy) an object. Admission controllers do not act on requests that merely read objects. When multiple admission controllers are configured, they are called in order.

This is shown as step 3 in the diagram.

Unlike Authentication and Authorization modules, if any admission controller module rejects, then the request is immediately rejected.

In addition to rejecting objects, admission controllers can also set complex defaults for fields.

The available Admission Control modules are described in Admission Controllers.

Once a request passes all admission controllers, it is validated using the validation routines for the corresponding API object, and then written to the object store (shown as step 4).
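The admission flow described in this section can be sketched in Python. This is a toy model under stated assumptions: objects are plain dictionaries, and both controllers and the `Rejected` exception are invented for illustration rather than taken from Kubernetes.

```python
class Rejected(Exception):
    """Raised by a controller to reject the request."""


def run_admission(obj, controllers):
    """Call each controller in order; any rejection stops processing immediately."""
    for controller in controllers:
        obj = controller(obj)  # a controller may return a modified copy
    return obj  # next steps: object validation, then the write to the object store


def default_service_account(pod):
    # Hypothetical mutating controller: fills in a default for an unset field.
    updated = dict(pod)
    updated.setdefault("serviceAccountName", "default")
    return updated


def deny_privileged(pod):
    # Hypothetical validating controller: inspects the object's contents.
    if pod.get("privileged"):
        raise Rejected("privileged pods are not allowed")
    return pod


chain = [default_service_account, deny_privileged]
admitted = run_admission({"name": "pause"}, chain)
```

Because the loop stops at the first exception, controllers later in the order never see a request that an earlier one rejected.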

Auditing

Kubernetes auditing provides a security-relevant, chronological set of records documenting the sequence of actions in a cluster. The cluster audits the activities generated by users, by applications that use the Kubernetes API, and by the control plane itself.

For more information, see Auditing.

API server ports and IPs

The previous discussion applies to requests sent to the secure port of the API server (the typical case). By default, the Kubernetes API server serves HTTP on 2 ports:

- localhost port:

  - is intended for testing and bootstrap, and for other components of the master node (scheduler, controller-manager) to talk to the API

  - no TLS

  - default is port 8080

  - default IP is localhost, change with --insecure-bind-address flag.

  - request bypasses authentication and authorization modules.

  - request handled by admission control module(s).

  - protected by need to have host access

- “Secure port”:

  - use whenever possible

  - uses TLS. Set cert with --tls-cert-file and key with --tls-private-key-file flag.

  - default is port 6443, change with --secure-port flag.

  - default IP is first non-localhost network interface, change with --bind-address flag.

  - request handled by authentication and authorization modules.

  - request handled by admission control module(s).

What's next

Read more documentation on authentication, authorization and API access control:

- Authenticating

- Admission Controllers

- Authorization

- Certificate Signing Requests

- including CSR approval and certificate signing

- Service accounts

You can learn about:

- how Pods can use Secrets to obtain API credentials.